TL;DR

- When a matrix $A$ is square and invertible, you can compute the inverse $A^{-1}$. But when the matrix is not square (e.g., tall or wide) or is singular (non-invertible), you can’t compute an ordinary inverse.

- Instead, you can compute something called a pseudoinverse, denoted $A^+$.

Moore-Penrose Conditions

- The pseudoinverse exists for any matrix and acts like an inverse “as much as possible.”

- It helps solve the least-squares problem $\min_x \|Ax - b\|_2$ even when $A$ is not nice (not square, or not full rank).

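- If $A$ is a full column rank matrix, then $A^+ = (A^T A)^{-1} A^T$, and $x = A^+ b$ gives the least-squares solution of $Ax = b$ (overdetermined system).

- If $A$ is a full row rank matrix with more columns than rows, then $A^+ = A^T (A A^T)^{-1}$. The system $Ax = b$ has infinitely many solutions, but $x = A^+ b$ gives the minimum-norm solution (underdetermined system).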

- If $A$ is a square, invertible matrix, $A^+ = A^{-1}$.
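The three cases above can be checked numerically. This is a minimal sketch that assumes NumPy and uses `np.linalg.pinv`; the matrices and variable names are made up for illustration, and the random tall and wide matrices are assumed to be full rank (which holds with probability 1 for Gaussian entries).

```python
import numpy as np

rng = np.random.default_rng(0)

# Overdetermined: tall matrix with full column rank (5 equations, 3 unknowns).
A_tall = rng.standard_normal((5, 3))
b_tall = rng.standard_normal(5)
x_ls = np.linalg.pinv(A_tall) @ b_tall
# Compare with the normal equations: solve (A^T A) x = A^T b.
x_normal = np.linalg.solve(A_tall.T @ A_tall, A_tall.T @ b_tall)

# Underdetermined: wide matrix with full row rank (3 equations, 5 unknowns).
A_wide = rng.standard_normal((3, 5))
b_wide = rng.standard_normal(3)
A_wide_pinv = np.linalg.pinv(A_wide)
x_min = A_wide_pinv @ b_wide
# Build another exact solution by adding a null-space component of A_wide.
null_part = (np.eye(5) - A_wide_pinv @ A_wide) @ rng.standard_normal(5)
x_other = x_min + null_part

# Square and invertible (fixed, diagonally dominant matrix).
A_sq = np.array([[2.0, 1.0, 0.0],
                 [1.0, 3.0, 1.0],
                 [0.0, 1.0, 4.0]])

print("least squares matches normal equations:", np.allclose(x_ls, x_normal))
print("both wide solutions are exact:",
      np.allclose(A_wide @ x_min, b_wide), np.allclose(A_wide @ x_other, b_wide))
print("pinv solution is shorter:", np.linalg.norm(x_min) < np.linalg.norm(x_other))
print("pinv equals inverse:", np.allclose(np.linalg.pinv(A_sq), np.linalg.inv(A_sq)))
```

The extra solution `x_other` differs from `x_min` only by a vector in the null space of `A_wide`, which is orthogonal to `x_min`, so its norm can only be larger.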

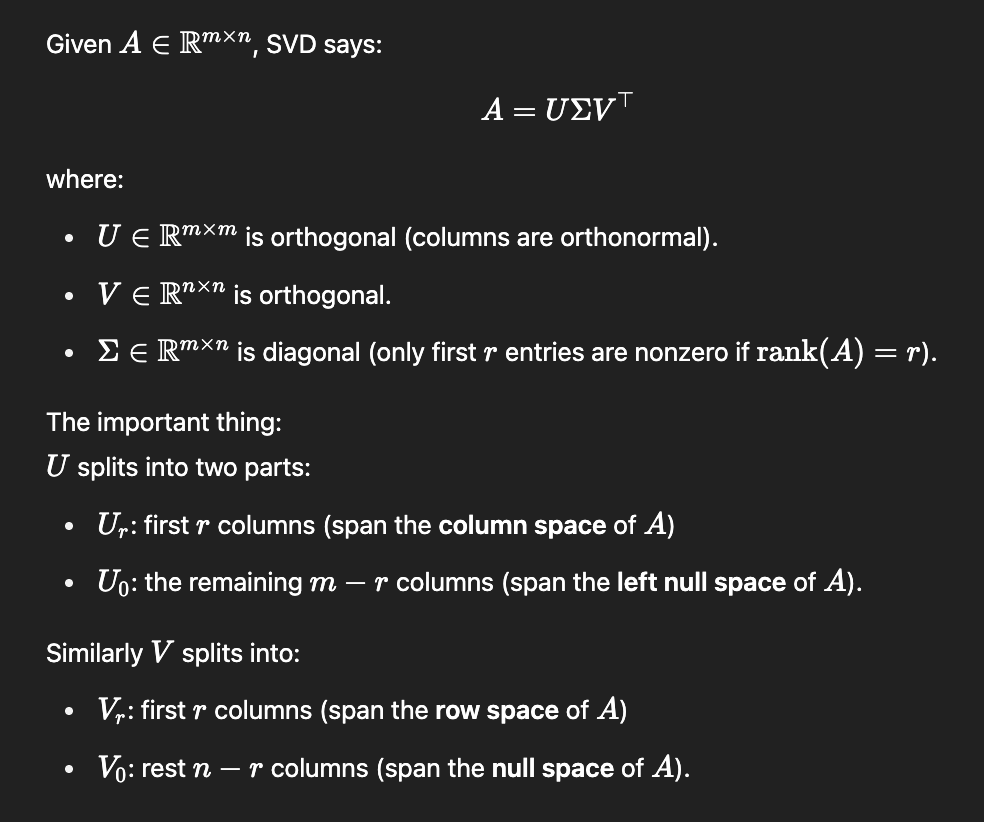

Calculating the Pseudoinverse of a Matrix

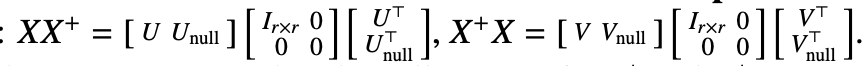

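For a given matrix $X \in \mathbb{R}^{m \times n}$ with SVD $X = U \Sigma V^T$, the pseudoinverse is defined as:

$$X^+ = V \Sigma^+ U^T$$

$\Sigma^+$ is obtained by taking the reciprocal of each non-zero singular value in $\Sigma$ and transposing the result.

If $\Sigma$ is $m \times n$ with $\sigma_1, \dots, \sigma_r > 0$ on its diagonal and zeros elsewhere, then $\Sigma^+$ is $n \times m$ with $1/\sigma_1, \dots, 1/\sigma_r$ on its diagonal and zeros elsewhere.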

Warning

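If we use the compact SVD $X = U_r \Sigma_r V_r^T$, then $X^+ = V_r \Sigma_r^{-1} U_r^T$. Here $\Sigma_r$ is square ($r \times r$) and invertible, so no transpose or zero padding is needed.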
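Both recipes can be sketched in a few lines of NumPy. The function names `pinv_via_svd` and `pinv_via_compact_svd`, the tolerance `tol`, and the example matrix are assumptions made for illustration; in practice `np.linalg.pinv` does this work for you.

```python
import numpy as np

def pinv_via_svd(X, tol=1e-12):
    """Pseudoinverse from the full SVD: X^+ = V Sigma^+ U^T."""
    m, n = X.shape
    U, s, Vt = np.linalg.svd(X, full_matrices=True)
    r = int(np.sum(s > tol * s.max()))  # numerical rank
    # Sigma^+ is n x m: reciprocals of the non-zero singular values, zeros elsewhere.
    Sigma_plus = np.zeros((n, m))
    Sigma_plus[:r, :r] = np.diag(1.0 / s[:r])
    return Vt.T @ Sigma_plus @ U.T

def pinv_via_compact_svd(X, tol=1e-12):
    """Pseudoinverse from the compact SVD: X^+ = V_r Sigma_r^{-1} U_r^T."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    r = int(np.sum(s > tol * s.max()))
    U_r, s_r, V_r = U[:, :r], s[:r], Vt[:r, :].T
    return V_r @ np.diag(1.0 / s_r) @ U_r.T

# Rank-2 example: the third column is the sum of the first two.
X = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 0.0, 2.0]])

P_full = pinv_via_svd(X)
P_compact = pinv_via_compact_svd(X)

# The four Moore-Penrose conditions characterize the pseudoinverse uniquely.
penrose = [
    np.allclose(X @ P_full @ X, X),
    np.allclose(P_full @ X @ P_full, P_full),
    np.allclose((X @ P_full).T, X @ P_full),
    np.allclose((P_full @ X).T, P_full @ X),
]
print("Penrose conditions hold:", all(penrose))
print("full and compact SVD agree:", np.allclose(P_full, P_compact))
print("matches np.linalg.pinv:", np.allclose(P_full, np.linalg.pinv(X, rcond=1e-12)))
```

The relative cutoff `tol * s.max()` decides which singular values count as zero; it plays the same role as the `rcond` argument of `np.linalg.pinv`.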

I am a little bit lazy about typing this out, but here is a good summary by ChatGPT of the connection between the pseudoinverse and the four fundamental subspaces.

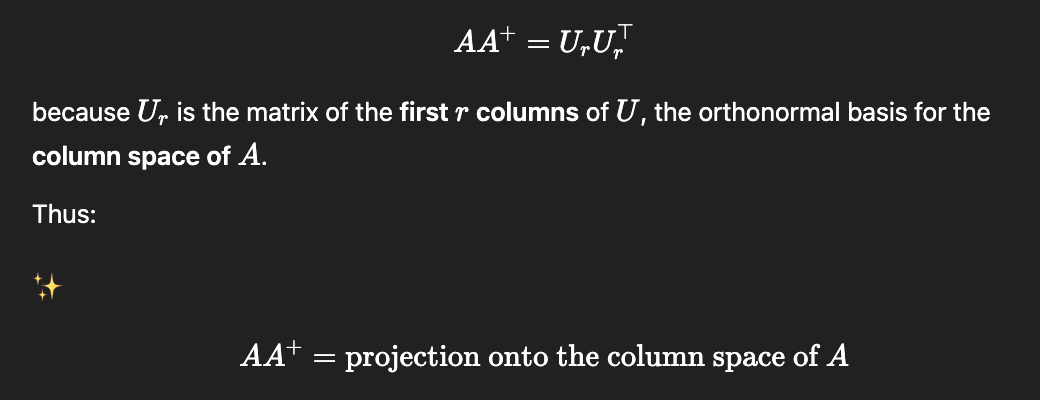

Important

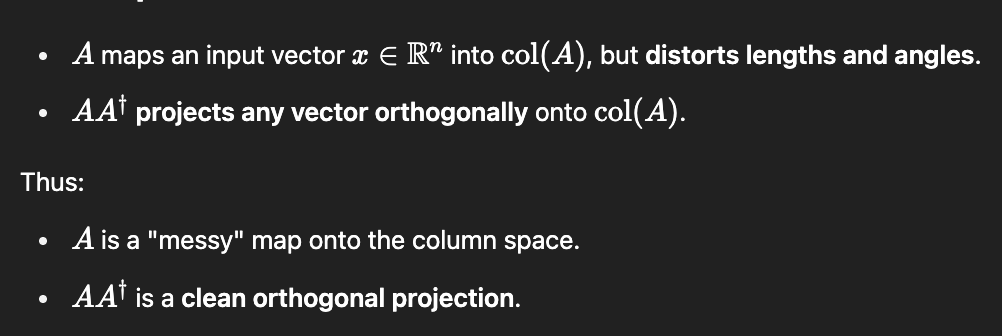

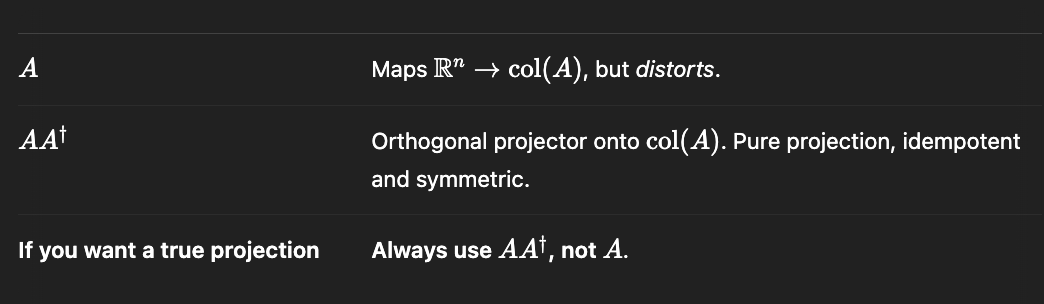

If both $XX^+$ and $X(X^TX)^{-1}X^T$ project onto the column space of $X$, how are they different?

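- Given a compact SVD $X = U_r \Sigma_r V_r^T$ (taken from the full SVD $X = U \Sigma V^T$),

- Null($X$) = Null($V_r^T$) = Span($v_{r+1}, \dots, v_n$), the columns of $V$ that are not in $V_r$.

- Row($X$) = Row($V_r^T$) = Span($v_1, \dots, v_r$).

- Null($X^TX$) = Null($X$) = Null($V_r^T$)

- Null($XX^T$) = Null($X^T$) = Null($U_r^T$)

- Row($X^T$) = Col($X$)

- Col($X^T$) = Row($X$)

- $X$, $X^T$, $X^TX$, and $XX^T$ all have the same rank $r$.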
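A small numerical check of these facts, written as a sketch with made-up matrices (`X_def` is rank-deficient, `X_full` keeps only its two independent columns). It also illustrates one way to read the question above: $X(X^TX)^{-1}X^T$ needs $X^TX$ to be invertible, i.e. full column rank, while $XX^+$ is defined either way.

```python
import numpy as np

# Rank-2 matrix: the third column is the sum of the first two.
X_def = np.array([[1.0, 0.0, 1.0],
                  [0.0, 1.0, 1.0],
                  [1.0, 1.0, 2.0],
                  [2.0, 0.0, 2.0]])
X_full = X_def[:, :2]  # same column space, but full column rank

def rank(M):
    # Explicit tolerance so tiny rounding errors are not counted as rank.
    return int(np.linalg.matrix_rank(M, tol=1e-8))

# X, X^T, X^T X and X X^T share the same rank.
ranks = [rank(M) for M in (X_def, X_def.T, X_def.T @ X_def, X_def @ X_def.T)]
print("ranks:", ranks)

# A vector in Null(X) is also in Null(X^T X).
v = np.array([1.0, 1.0, -1.0])
print("X v:", X_def @ v, " X^T X v:", (X_def.T @ X_def) @ v)

# Full column rank: both projector formulas agree.
P_pinv = X_full @ np.linalg.pinv(X_full)
P_hat = X_full @ np.linalg.inv(X_full.T @ X_full) @ X_full.T
print("projectors agree:", np.allclose(P_pinv, P_hat))

# Rank-deficient: X^T X is singular, so only X X^+ is available.
gram_is_singular = rank(X_def.T @ X_def) < X_def.shape[1]
P_def = X_def @ np.linalg.pinv(X_def, rcond=1e-12)
print("Gram matrix singular:", gram_is_singular)
print("X X^+ still projects onto Col(X):", np.allclose(P_def, P_pinv))
```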

Notice that $XX^+ = U \Sigma \Sigma^+ U^T = U \begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix} U^T$ has the same form as an eigenvalue decomposition.

Therefore, the first $r$ columns of $U$ are eigenvectors of $XX^+$ with eigenvalue 1, and the remaining columns are eigenvectors with eigenvalue 0.
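The eigenvector claim can be verified directly. This is a sketch assuming NumPy, with an illustrative rank-2 matrix; `P` denotes the projector $XX^+$ and `U` comes from the full SVD.

```python
import numpy as np

# Rank-2 example: the third column is the sum of the first two.
X = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 0.0, 2.0]])

U, s, Vt = np.linalg.svd(X, full_matrices=True)
r = int(np.sum(s > 1e-10))              # numerical rank
P = X @ np.linalg.pinv(X, rcond=1e-12)  # projector onto Col(X)

# P = U diag(1, ..., 1, 0, ..., 0) U^T with r ones on the diagonal.
D = np.diag([1.0] * r + [0.0] * (U.shape[0] - r))
print("P equals U D U^T:", np.allclose(P, U @ D @ U.T))

# First r columns of U: eigenvalue 1. Remaining columns: eigenvalue 0.
print("P u_i = u_i for i <= r:", np.allclose(P @ U[:, :r], U[:, :r]))
print("P u_i = 0 for i > r:", np.allclose(P @ U[:, r:], 0.0))

# P is symmetric (up to rounding), so eigvalsh applies.
eigvals = np.sort(np.linalg.eigvalsh(P))[::-1]
print("eigenvalues:", np.round(eigvals, 6))
```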